Learn how a simple k-nearest-neighbor (kNN) classifier works

In [3]:

#@title Run this cell to complete the setup for this Notebook
from IPython import get_ipython

ipython = get_ipython()

notebook = "M0W1_EXP_3_KNN_weight_sphericity"  # name of the notebook

def setup():
    # ipython.run_line_magic("sx", "pip3 install torch")
    ipython.run_line_magic("sx", "wget https://cdn.talentsprint.com/aiml/Experiment_related_data/kNN_weight_sphericity_train.csv")
    ipython.run_line_magic("sx", "wget https://cdn.talentsprint.com/aiml/Experiment_related_data/kNN_weight_sphericity_test.csv")
    print("Setup completed successfully")
    return

def submit_notebook():
    ipython.run_line_magic("notebook", "-e " + notebook + ".ipynb")

    import requests, json, base64, datetime

    url = "https://dashboard.talentsprint.com/xp/app/save_notebook_attempts"

    if not submission_id:
        data = {"id": getId(), "notebook": notebook, "mobile": getPassword()}
        r = requests.post(url, data=data)
        r = json.loads(r.text)
        if r["status"] == "Success":
            return r["record_id"]
        elif "err" in r:
            print(r["err"])
            return None
        else:
            print("Something is wrong, the notebook will not be submitted for grading")
            return None

    elif getAnswer() and getComplexity() and getAdditional() and getConcepts():
        with open(notebook + ".ipynb", "rb") as f:
            file_hash = base64.b64encode(f.read())
        data = {"complexity": Complexity, "additional": Additional,
                "concepts": Concepts, "record_id": submission_id,
                "answer": Answer, "id": Id, "file_hash": file_hash,
                "notebook": notebook}
        r = requests.post(url, data=data)
        r = json.loads(r.text)
        print("Your submission is successful.")
        print("Ref Id:", submission_id)
        print("Date of submission: ", r["date"])
        print("Time of submission: ", r["time"])
        print("For any queries/discrepancies, please connect with mentors through the chat icon in the LMS dashboard.")
        return submission_id

    else:
        return submission_id

def getAdditional():
    try:
        if Additional:
            return Additional
        else:
            raise NameError('')
    except NameError:
        print("Please answer Additional Question")
        return None

def getComplexity():
    try:
        return Complexity
    except NameError:
        print("Please answer Complexity Question")
        return None

def getConcepts():
    try:
        return Concepts
    except NameError:
        print("Please answer Concepts Question")
        return None

def getAnswer():
    try:
        return Answer
    except NameError:
        print("Please answer Question")
        return None

def getId():
    try:
        return Id if Id else None
    except NameError:
        return None

def getPassword():
    try:
        return password if password else None
    except NameError:
        return None

submission_id = None

### Setup
if getPassword() and getId():
    submission_id = submit_notebook()
    if submission_id:
        setup()
else:
    print("Please complete Id and Password cells before running setup")

In [0]:

## Let us set up the file names
FRUITS_TRAIN = "kNN_weight_sphericity_train.csv"
FRUITS_TEST1 = "kNN_weight_sphericity_test.csv"

In [0]:

# Let us first read the data from the file and do a quick visualization
import pandas as pd

train = pd.read_csv(FRUITS_TRAIN)
train

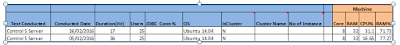

Out[0]:

In [0]:

import matplotlib.pyplot as plt

In [0]:

apples = train[train['Label'] == 'A']
oranges = train[train['Label'] == 'O']

In [0]:

plt.plot(apples.Weight, apples.Sphericity, "ro")
plt.plot(oranges.Weight, oranges.Sphericity, "bo")
plt.xlabel("Weight -- in grams")
plt.ylabel("Sphericity")
plt.legend(["Apples", "Oranges"])
plt.plot([373], [1], "ko")  # the query point (373, 1), drawn in black
plt.show()

In [0]:

import math

def dist(a, b):
    '''a is the n-dimensional coordinate of point 1;
    b is the n-dimensional coordinate of point 2.
    Returns the Euclidean distance between them.'''
    sqSum = 0
    for i in range(len(a)):
        sqSum += (a[i] - b[i]) ** 2
    return math.sqrt(sqSum)
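Because `dist` is plain Euclidean distance, the standard library's `math.dist` (Python 3.8 and later) should give the same value up to floating-point rounding. The sketch below checks this on two made-up points; the coordinates and the name `dist_loop` are illustrative assumptions, not taken from the dataset.

```python
import math

def dist_loop(a, b):
    """Euclidean distance with an explicit loop, as in dist() above."""
    sq_sum = 0
    for i in range(len(a)):
        sq_sum += (a[i] - b[i]) ** 2
    return math.sqrt(sq_sum)

# Two made-up (weight, sphericity) points, not taken from the dataset
p, q = (373, 1.0), (370, 0.8)

# math.dist computes the same Euclidean distance in a single call
print(dist_loop(p, q), math.dist(p, q))
print(dist_loop((0, 0), (3, 4)))  # 5.0
```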

In [0]:

def kNN(k, train, given):
    distances = []
    for t in train.values:
        # loop over all training samples
        distances.append((dist(t[:2], given), t[2]))
        # compute and store the distance of each training sample from the given sample, with its label
    distances.sort()
    return distances[:k]  # the first k entries are the k nearest training samples
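`kNN` sorts every distance and then keeps the first `k`. A minimal alternative sketch, assuming rows shaped like `train.values` (weight, sphericity, label), uses `heapq.nsmallest`, which returns the same `k` smallest `(distance, label)` pairs without sorting the whole list. The function name `knn_nearest` and the toy rows are hypothetical.

```python
import heapq
import math

def knn_nearest(k, rows, given):
    """Return the k (distance, label) pairs closest to `given`.

    rows: iterable of (feature_1, feature_2, label) tuples, like train.values.
    heapq.nsmallest(k, ...) matches sorted(...)[:k] without a full sort.
    """
    pairs = ((math.dist(row[:2], given), row[2]) for row in rows)
    return heapq.nsmallest(k, pairs)

# Hypothetical toy rows: (weight in grams, sphericity, label)
toy = [(300, 0.70, 'A'), (310, 0.75, 'A'),
       (380, 0.95, 'O'), (390, 0.90, 'O'), (360, 0.85, 'O')]
print(knn_nearest(3, toy, (373, 1)))
```

For small training sets like this one the difference is negligible; the heap version only pays off when the training set is large and `k` is small.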

In [0]:

print(kNN(3, train, (373, 1)))
print(kNN(5, train, (373, 1)))

In [0]:

print(kNN(7, train, (250, 1)))
print(kNN(5, train, (250, 1)))

In [0]:

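import collections

def kNNmax(k, train, given):
    # count how many of the k nearest neighbours carry each label
    tally = collections.Counter()
    for nn in kNN(k, train, given):
        tally[nn[-1]] += 1  # count the whole label, not its characters
    return tally.most_common(1)[0]  # (label, votes) of the majority label

print(kNNmax(5, train, (340, 1)))
print(kNNmax(7, train, (340, 1)))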
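`kNNmax` turns the neighbour list into a majority vote with a `Counter`. The sketch below, using hypothetical multi-character labels, shows two details of that vote: passing a string to `Counter.update` counts its characters rather than the label, and `most_common` keeps first-encountered order among equal counts, so with neighbours sorted by distance a tie goes to the nearer neighbour's label. The name `majority_vote` is an assumption for illustration.

```python
import collections

def majority_vote(neighbours):
    """neighbours: (distance, label) pairs sorted by distance, as kNN() returns."""
    tally = collections.Counter()
    for _, label in neighbours:
        tally[label] += 1  # count the whole label once per neighbour
    # Among equal counts, most_common() keeps the order labels were first seen,
    # so a tie is won by the label of the nearest tied neighbour.
    return tally.most_common(1)[0]

print(majority_vote([(1.0, 'Apple'), (2.0, 'Orange'), (3.0, 'Apple')]))  # ('Apple', 2)
print(majority_vote([(1.0, 'Orange'), (2.0, 'Apple')]))                   # ('Orange', 1)

# Pitfall: update() iterates over the string and counts characters
pitfall = collections.Counter()
pitfall.update('Apple')
print(pitfall['p'])  # 2
```

With two classes and an odd `k`, as in the calls above, ties cannot occur; they matter only for even `k` or more than two classes.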

In [0]:

test = pd.read_csv(FRUITS_TEST1)
testData = test.values[:, :-1]    # feature columns
testResults = test.values[:, -1]  # true labels

results = []
for i, t in enumerate(testData):
    results.append(kNNmax(3, train, t)[0] == testResults[i])
print(results.count(True), "are correct")
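A raw count of correct predictions is easier to compare across test sets when expressed as accuracy, the fraction of test samples classified correctly. A minimal sketch with hypothetical predicted and true labels (the helper name `accuracy` is an assumption):

```python
def accuracy(predicted, expected):
    """Fraction of positions where the predicted label equals the expected one."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("need at least one sample")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)

print(accuracy(['A', 'O', 'O', 'A'], ['A', 'O', 'A', 'A']))  # 0.75
```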

In [0]:

#@title Linear classifiers learn a separating hyperplane from the training samples. Run the following cell to create a linear classifier and plot its output.
import numpy as np
from sklearn import linear_model, preprocessing
from sklearn.preprocessing import MinMaxScaler

le = preprocessing.LabelEncoder()
scaler = MinMaxScaler()

X2 = train.iloc[:, :-1].values
X = scaler.fit_transform(X2)                    # rescale both features to [0, 1]
Y = le.fit_transform(train.iloc[:, -1].values)  # encode the labels as integers

h = .02  # step size in the mesh

# clf2 = Perceptron(max_iter=1000).fit(X, Y)
clf2 = linear_model.SGDClassifier(max_iter=1000, tol=1e-3)
clf2.fit(X, Y)

# create a mesh to plot in
x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
xx, yy = np.meshgrid(np.arange(x_min, x_max, h),
                     np.arange(y_min, y_max, h))

# Plot the decision boundary. For that, we will assign a color to each
# point in the mesh [x_min, x_max]x[y_min, y_max].
fig, ax = plt.subplots()
Z = clf2.predict(np.c_[xx.ravel(), yy.ravel()])

# Put the result into a color plot
Z = Z.reshape(xx.shape)
ax.contourf(xx, yy, Z, cmap=plt.cm.Paired)
ax.axis('off')

# Plot also the training points
ax.scatter(X[:, 0], X[:, 1], c=Y)
ax.set_title('Linear Classifier')
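The linear classifier rescales both features with `MinMaxScaler` before fitting, while the kNN `dist` works on raw values. Weight is measured in grams (hundreds), and the query points use a sphericity of 1, so unscaled weight differences are likely to dominate the kNN distance. The sketch below applies the min-max formula x' = (x - min) / (max - min) per column, which is what `MinMaxScaler` does with its default range of [0, 1]; the helper name `min_max_scale`, the constant-column guard, and the three points are assumptions for illustration.

```python
import math

def min_max_scale(rows):
    """Rescale each column of `rows` to [0, 1] using (x - min) / (max - min)."""
    cols = list(zip(*rows))
    mins = [min(c) for c in cols]
    maxs = [max(c) for c in cols]
    scaled = [
        tuple((x - lo) / (hi - lo) if hi > lo else 0.0  # guard: constant column
              for x, lo, hi in zip(row, mins, maxs))
        for row in rows
    ]
    return scaled, mins, maxs

# Hypothetical (weight in grams, sphericity) points
points = [(300, 0.70), (400, 0.90), (350, 1.00)]
scaled, mins, maxs = min_max_scale(points)
print(scaled)

raw = math.dist(points[0], points[2])       # almost entirely the 50 g weight gap
rescaled = math.dist(scaled[0], scaled[2])  # both features now contribute
print(raw, rescaled)
```

To classify a new point with scaled kNN, it would have to be rescaled with the training set's `mins` and `maxs`, not with its own.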

In [0]:

## Your code here
print(kNN(5, train, (373, 1)))
print(kNN(7, train, (373, 1)))

In [0]:

## Your code here
print(kNN(17, train, (373, 1)))

In [0]:

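import math

def dist(a, b):
    '''a is the n-dimensional coordinate of point 1;
    b is the n-dimensional coordinate of point 2.
    This variant skips the last coordinate, so for (weight, sphericity)
    points only the weight difference contributes.'''
    sqSum = 0
    for i in range(len(a) - 1):
        sqSum += (a[i] - b[i]) ** 2
    return math.sqrt(sqSum)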
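Looping over `range(len(a) - 1)` drops the last coordinate, so with the two-feature points used in `kNN` the distance collapses to the absolute weight difference and sphericity is ignored. A small sketch confirming this; the name `dist_without_last` and the sample points are hypothetical.

```python
import math

def dist_without_last(a, b):
    """Euclidean distance over every coordinate except the last one."""
    return math.sqrt(sum((a[i] - b[i]) ** 2 for i in range(len(a) - 1)))

# For 2-D (weight, sphericity) points only the weight difference survives
print(dist_without_last((373, 1.0), (350, 0.2)))  # 23.0
# For 3-D points the first two coordinates are used
print(dist_without_last((0, 0, 5), (3, 4, 100)))  # 5.0
```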
